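Writing a crawler in Java is easier than you might think. Basically, you request a URL, read the page's source, and then use a few tools to pull out the parts you need.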

Request the URL → read the HTML source → process the source → extract the parts you need
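The four steps can be sketched with nothing but the JDK. This is a minimal sketch, assuming Java 11+ for `java.net.http.HttpClient`. The class name `PipelineSketch` is hypothetical, and the regex-based `extractTitle` is only a crude, dependency-free stand-in for jsoup. The tutorial itself uses HttpClient and jsoup for these steps.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical example class: request URL -> read HTML -> process -> extract
class PipelineSketch {

    // Matches the contents of the first <title> element (case-insensitive, may span lines).
    private static final Pattern TITLE =
            Pattern.compile("<title[^>]*>(.*?)</title>", Pattern.CASE_INSENSITIVE | Pattern.DOTALL);

    // Steps 1 and 2: request the URL and read the response body as a String.
    // BodyHandlers.ofString() decodes with the Content-Type charset, or UTF-8 if none is given.
    static String fetch(String url) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(15))
                .followRedirects(HttpClient.Redirect.NORMAL)
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url))
                .timeout(Duration.ofSeconds(15))
                .GET()
                .build();
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    // Steps 3 and 4: process the source and pull out the part we need (here, the page title).
    static String extractTitle(String html) {
        Matcher m = TITLE.matcher(html);
        return m.find() ? m.group(1).trim() : "";
    }

    public static void main(String[] args) throws Exception {
        String html = fetch("https://example.com/");
        System.out.println(extractTitle(html));
    }
}
```

Calling `fetch` needs network access; `extractTitle` can be tried offline on any HTML string. For real pages, a proper parser such as jsoup is far more robust than a regex.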

Tools you need

Apache HttpClient download: http://hc.apache.org/downloads.cgi (this tutorial uses version 4.5.2)

After unzipping it, add all the jar files from the lib folder to your project.

jsoup download: https://jsoup.org/download

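jsoup is an HTML parser for Java that can parse a page directly from a URL or from an HTML string. It offers a very convenient API for extracting and manipulating data through DOM methods, CSS selectors, and jQuery-like operations.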

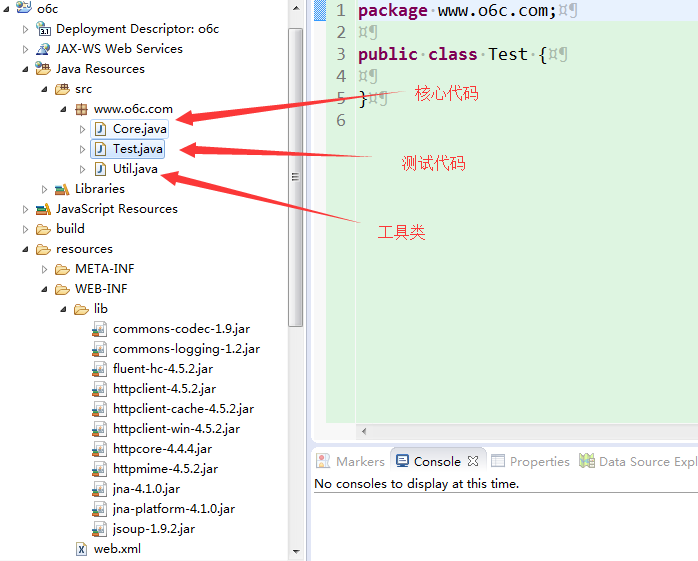

Architecture diagram

From here on, the explanation is in the code comments.

Util class

package www.o6c.com;

import java.io.ByteArrayOutputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URL;

/**
 * Crawler utility class
 *
 * @author 江风成
 */
public class Util {

    /**
     * Guesses the encoding of a string by checking, in order, whether it survives an
     * encode/decode round trip in GB2312, ISO-8859-1, UTF-8 and GBK.
     * Note: a Java String is already decoded, so this cannot recover the encoding of the
     * original bytes; any ASCII-only string is reported as GB2312.
     *
     * @param str the string to check
     * @return the first charset that round-trips, or "" if none does
     */
    public static String getEncoding(String str) {
        String[] candidates = {"GB2312", "ISO-8859-1", "UTF-8", "GBK"};
        for (String encode : candidates) {
            try {
                if (str.equals(new String(str.getBytes(encode), encode))) {
                    return encode;
                }
            } catch (Exception ignored) {
                // Charset not supported by this JVM: try the next one
            }
        }
        return "";
    }

    /**
     * Writes image data to disk under D:\DAGAIER\ (a Windows path; change it to suit your machine).
     *
     * @param img      the image data
     * @param fileName the name to save the file under, relative to D:\DAGAIER\
     */
    public static void writeImageToDisk(byte[] img, String fileName) {
        File file = new File("D:\\DAGAIER\\" + fileName);
        // Create the directory the file will live in first
        File parent = file.getParentFile();
        if (parent != null) {
            parent.mkdirs();
        }
        // try-with-resources closes the stream even if write() fails
        try (FileOutputStream fops = new FileOutputStream(file)) {
            fops.write(img);
            fops.flush();
            System.out.println("Image written to " + file.getPath());
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Downloads the data at a URL and returns it as a byte array.
     *
     * @param strUrl the URL to fetch
     * @return the downloaded bytes, or null if the request failed
     */
    public static byte[] getImageFromNetByUrl(String strUrl) {
        try {
            URL url = new URL(strUrl);
            HttpURLConnection conn = (HttpURLConnection) url.openConnection();
            // Some sites refuse requests that do not look like they come from a browser
            conn.setRequestProperty("User-Agent", "Mozilla/4.0 (compatible; MSIE 5.0; Windows NT; DigExt)");
            conn.setRequestMethod("GET");
            conn.setConnectTimeout(5 * 1000);
            // Without a read timeout, a stalled download would block forever
            conn.setReadTimeout(15 * 1000);
            // Read the image data through the input stream
            InputStream inStream = conn.getInputStream();
            // Return the image's binary data
            return readInputStream(inStream);
        } catch (Exception e) {
            e.printStackTrace();
        }
        return null;
    }

    /**
     * Reads an input stream to the end and returns its contents.
     *
     * @param inStream the input stream; it is closed before this method returns
     * @return all bytes read from the stream
     * @throws IOException if reading fails
     */
    public static byte[] readInputStream(InputStream inStream) throws IOException {
        ByteArrayOutputStream outStream = new ByteArrayOutputStream();
        byte[] buffer = new byte[1024];
        int len;
        try (InputStream in = inStream) {
            while ((len = in.read(buffer)) != -1) {
                outStream.write(buffer, 0, len);
            }
        }
        return outStream.toByteArray();
    }
}
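`getEncoding` only checks whether an already-decoded Java `String` survives a round trip through each charset, so it cannot tell how the original bytes were encoded: a pure-ASCII string passes the very first check and is reported as `GB2312`. A more reliable starting point is the `charset` parameter of the response's `Content-Type` header. HttpClient's `EntityUtils.toString(entity, defaultCharset)` already works this way, using the second argument only when the header names no charset. The sketch below shows the idea in plain Java; the class `CharsetSketch` and its methods are hypothetical, and a fuller approach would probably also check the page's `<meta charset>` declaration.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

// Hypothetical helper contrasting the round-trip check with header-based detection.
class CharsetSketch {

    // Same idea as Util.getEncoding: true if the string survives encode/decode with this charset.
    static boolean survivesRoundTrip(String s, Charset cs) {
        return s.equals(new String(s.getBytes(cs), cs));
    }

    // Reads the charset parameter of a Content-Type header value, falling back to a default.
    static Charset charsetFromContentType(String contentType, Charset fallback) {
        if (contentType == null) {
            return fallback;
        }
        for (String part : contentType.split(";")) {
            String p = part.trim();
            if (p.toLowerCase(Locale.ROOT).startsWith("charset=")) {
                String name = p.substring("charset=".length()).replace("\"", "").trim();
                try {
                    return Charset.forName(name);
                } catch (IllegalArgumentException e) {
                    return fallback; // unknown or malformed charset name
                }
            }
        }
        return fallback; // header names no charset
    }

    public static void main(String[] args) {
        // An ASCII string survives GB2312 and UTF-8 alike, so the round trip says nothing.
        System.out.println(survivesRoundTrip("hello", Charset.forName("GB2312"))); // true
        System.out.println(survivesRoundTrip("hello", StandardCharsets.UTF_8));   // true
        System.out.println(charsetFromContentType("text/html; charset=GBK", StandardCharsets.UTF_8));
    }
}
```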

Core class

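package www.o6c.com;

import org.apache.http.HttpEntity;
import org.apache.http.client.config.RequestConfig;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

/**
 * Core crawler logic
 *
 * @author 江风成
 */
public class Core {

    // 15-second socket, connect and connection-request timeouts
    private static final RequestConfig requestConfig = RequestConfig.custom()
            .setSocketTimeout(15000)
            .setConnectTimeout(15000)
            .setConnectionRequestTimeout(15000)
            .build();

    /**
     * Reads the HTML source of a page.
     *
     * @param url absolute URL including the scheme, e.g. http://... or https://...
     * @return the page source, or null if the request failed
     */
    public String readingHtmlSource(String url) {
        // GET request; for a site that requires HTTPS, just pass an https:// URL
        HttpGet httpGet = new HttpGet(url);
        httpGet.setConfig(requestConfig);
        String htmlContent = null;
        // try-with-resources closes the response and the client, releasing the connection
        try (CloseableHttpClient httpClient = HttpClients.createDefault();
             CloseableHttpResponse response = httpClient.execute(httpGet)) {
            HttpEntity entity = response.getEntity();
            if (entity != null) {
                // Decodes with the charset from the Content-Type header; UTF-8 is used only
                // when the header names none. Re-encoding the decoded text with another
                // charset (as the first version did) would garble non-ASCII characters.
                htmlContent = EntityUtils.toString(entity, "UTF-8");
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        return htmlContent;
    }

    /**
     * Extracts the parts we want (the post title and image links) and downloads each image.
     * The class names tr1, tpc_content and tr3 match the markup of the forum page this
     * code was written for; other sites need other selectors.
     *
     * @param html the page source
     * @return the post title, or null if the page does not have the expected layout
     */
    public String selectHtml(String html) {
        // Parse the HTML string into a Document that jsoup can query
        Document doc = Jsoup.parse(html);
        // Title: text of the h4 under the first element with class "tr1"
        Element header = doc.getElementsByClass("tr1").first();
        if (header == null) {
            System.out.println("No element with class tr1 found; the page layout is different");
            return null;
        }
        String title = header.getElementsByTag("h4").text();
        // Count the images inside the first "tpc_content" div
        Element content = doc.getElementsByClass("tpc_content").first();
        int imgsize = content == null ? 0 : content.getElementsByTag("img").size();
        // Take each image link from the first <a> in a "tr3" row, without running past the last row
        Elements rows = doc.getElementsByClass("tr3");
        for (int i = 0; i < imgsize && i < rows.size(); i++) {
            Element link = rows.get(i).getElementsByTag("a").first();
            if (link == null) {
                continue;
            }
            String img = link.attr("href");
            // Download the image and save it locally
            byte[] btImg = Util.getImageFromNetByUrl(img);
            if (btImg != null && btImg.length > 0) {
                // File extension from the last path segment, e.g. ".jpg"; empty if there is none
                int slash = img.lastIndexOf('/');
                int dot = img.lastIndexOf('.');
                String suffix = dot > slash ? img.substring(dot) : "";
                String fileName = title + "\\" + i + suffix;
                Util.writeImageToDisk(btImg, fileName);
            } else {
                System.out.println("No content received from this link: " + img);
            }
        }
        return title;
    }
}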
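`selectHtml` builds each file name from the post title, the loop index, and the text after the last `.` in the image URL. Two things can still go wrong there: a forum title may contain characters Windows does not allow in file names (`\ / : * ? " < > |`), and an image URL may carry a query string or have no extension at all. A minimal sketch of defensive helpers, assuming Windows naming rules; `FileNames`, `sanitize`, `extensionOf` and `imageFileName` are hypothetical names, and `.jpg` as the fallback extension is an arbitrary choice.

```java
import java.net.URI;

// Hypothetical helpers for turning a post title and an image URL into a safe relative path.
class FileNames {

    // Replaces characters that are illegal in Windows file names; trims trailing dots and spaces.
    static String sanitize(String title) {
        String cleaned = title.replaceAll("[\\\\/:*?\"<>|]", "_").trim();
        cleaned = cleaned.replaceAll("[. ]+$", "");
        return cleaned.isEmpty() ? "untitled" : cleaned;
    }

    // Returns the extension of the URL's path (e.g. ".png"), ignoring any query or fragment.
    static String extensionOf(String imageUrl, String fallback) {
        String path;
        try {
            path = URI.create(imageUrl).getPath();
        } catch (IllegalArgumentException e) {
            return fallback; // not a parseable URI
        }
        if (path == null) {
            return fallback;
        }
        int slash = path.lastIndexOf('/');
        int dot = path.lastIndexOf('.');
        return dot > slash ? path.substring(dot) : fallback;
    }

    // Builds "<title>\<index><ext>", the same layout selectHtml uses.
    static String imageFileName(String title, int index, String imageUrl) {
        return sanitize(title) + "\\" + index + extensionOf(imageUrl, ".jpg");
    }
}
```

For example, `FileNames.imageFileName("My: Post", 0, "http://x.com/p/1.gif")` yields `My_ Post\0.gif`.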

Test class

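package www.o6c.com;

/**
 * Test class
 *
 * @author 江风成
 */
public class Test {

    public static void main(String[] args) {
        Core core = new Core();
        // The URL needs its scheme (http:// or https://); a bare "www.o6c.com" cannot be requested.
        // selectHtml only finds something on pages that use the tr1 / tpc_content / tr3 markup.
        String html = core.readingHtmlSource("http://www.o6c.com");
        if (html != null) {
            core.selectHtml(html);
        }
    }
}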
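HttpClient needs an absolute URL with a scheme. `java.net.URI` parses a bare `www.o6c.com` as a relative path with no host, so a request built from it cannot be sent. If the URL comes from user input, a small normalization step can add a default scheme first. A minimal sketch, assuming plain `http://` is an acceptable default and only http/https should be allowed; `UrlInput.normalize` is a hypothetical helper, not part of the original project.

```java
import java.net.URI;
import java.util.Locale;

// Hypothetical helper: turns input such as "www.o6c.com" into an absolute http(s) URL.
class UrlInput {

    static String normalize(String input) {
        String s = input.trim();
        // No "scheme://" prefix given: assume plain http
        if (!s.matches("(?i)[a-z][a-z0-9+.-]*://.*")) {
            s = "http://" + s;
        }
        URI uri = URI.create(s); // throws IllegalArgumentException on malformed input
        String scheme = uri.getScheme().toLowerCase(Locale.ROOT);
        if (!scheme.equals("http") && !scheme.equals("https")) {
            throw new IllegalArgumentException("Unsupported scheme: " + scheme);
        }
        if (uri.getHost() == null) {
            throw new IllegalArgumentException("No host in URL: " + s);
        }
        return uri.toString();
    }

    public static void main(String[] args) {
        System.out.println(URI.create("www.o6c.com").getHost()); // null: parsed as a relative path
        System.out.println(normalize("www.o6c.com"));             // http://www.o6c.com
    }
}
```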

If anything is unclear, leave me a comment. This is original work, so please credit the source if you repost it. Thanks!